Table of content

Statistical modeling is a vital area of data analytics. By applying various statistical models to the data, data analysts can perceive and interpret the information more clearly and deeply. Rather than pondering over the raw data, this methodology allows them to discover relationships between variables, predict events based on available data patterns or trends, and use visualization tools for the smart representation of complex data models to help stakeholders understand it.[lwptoc skipHeadingLevel="h1,h4,h5,h6"]The role of data scientists is to build data models and writing machine learning codes and data analysts uses these statistical models to extract fruitful insights from data. That’s why analysts who are gearing up to shape their expertise in statistical modeling must grasp a concept of these methodologies and know where and how to apply them.

Statistical Modeling in a Nut Shell

A statistical model collects probability distributions on a set of all plausible conclusions of an experiment.

It is the mathematical approach that involves the process of applying statistical modeling and analysis to datasets. Building a statistical model means discovering or establishing a mathematical proportionality between one or more random variables and other non-random variables. By applying statistical modeling to raw data, data scientists use data analysis as a strategic method and provide intuitive visualizations that help discover latent linkage between variables and make predictions.To make statistical models, data comes from a variety of public sector sources, including:

- Internet of Things (IoT) sensors

- Survey data

- Public health data

- Social media data

- Imagery data

- Satellite Data

- Passengers Data

- Consumers Data

- Student Record, etc.

Statistical Modeling Techniques

The initial step involved in building a statistical model is data gathering, which may be driven using various sources like spreadsheets, databases, data lakes, or the cloud. The second most crucial part involves data analytics consisting of supervised learning or unsupervised machine learning methodologies. Some renowned statistical algorithms and approaches include logistic regression, time series, clustering, and decision trees.

Supervised Learning

Regression models and Classification models fall into the category of Supervised learning methodology.

Regression model

This predictive model is used to analyze the relationship between a dependent and an independent variable. Common regression models include logistic, polynomial, and linear regression methodologies. Practical uses include forecasting, time series algorithm, and discovering the causality between variables.

Classification model

It is the type of machine learning methodology by which an algorithm analyzes an available, huge and complex set of known data points to acknowledge and appropriately classify the data in different classes based on its nature. The most common approaches include decision trees, Naive Bayes, Nearest Neighbor, Random Forests, and Neural networks. All these methodologies contribute to modern Artificial Intelligence (AI) approaches.

Unsupervised learning

This domain includes methodologies of clustering algorithms and association trees, discussed below:

K-means clustering

It piles a certain number of data points into a specific number of groupings based on similarities.

Reinforcement learning

It is the area of deep learning that deals with the models iterating over several cycles, assigning weights to the steps that produce favorable outcomes, and abandoning moves that produce false results, therefore training the algorithm to learn optimized attributes and data points.There are three main types of statistical models: parametric, nonparametric, and semiparametric:

- Parametric: defined as a group of probability distributions that has a finite number of parameters.

- Nonparametric: defined as a domain in which the number and attribute of the parameters are flexible and not predetermined.

- Semiparametric: It shares both a finite-dimensional component (parametric) and an infinite-dimensional component (nonparametric).

How to Build Statistical Models

The initial and most crucial step in building a statistical model is acknowledging how to choose a statistical model depending on several variables. The sole purpose of the analysis is to address a very certain question or problem or to make accurate predictions from a set of variables. Here are few questions that need to be kept in mind while choosing a model.

Also Read: How to Build an Effective AI Model for Business

- How many descriptive and dependent variables are there?

- What is the nature of the relationships and proportionality between dependent and descriptive variables?

- How many parameters must be added to the model?

Once these questions are answered, the appropriate model can be selected.

- Once you select a statistical model, the next step is to build the model. This process of building a model involves the following steps.For the sake of simplicity, start by pondering over the univariate data attributes. Visualize the data to identify errors and understand the nature and behavior of variables you’re using.

- Build a prediction table using visible and readily available data attributes to observe how related variables work together and then evaluate the outcomes.

- Move one step forward by building bivariate descriptives and graphs to identify the strength and nature of relationships that the potential predictor creates with every other predictor and then evaluate the outcome.

- Frequently outline and evaluate the results from models.

- Discard non-significant relations first and ensure that any variable that possesses a significant association with other variables must be included in the model by itself.

- While studying and discovering the overwhelming relationships between variables and categorizing and testing every fruitful predictor, refrain from deviating from the research question.

Applications of Statistical Models

Here are some of the most common applications of statistical models.

Spatial Models

Spatial analysis is a kind of geographical analysis intended to demonstrate patterns of human behavior and its spatial expression in terms of mathematics and geometry. Some practical examples include nearest neighbor analysis and Thiessen polygons. The co-variation of attributes within geographic space is known as Spatial dependency; properties at proximal locations appear to be correlated, either positively or negatively. Spatial Modeling is done by dividing an area into many similar units, normally grid squares or polygons.

The resultant model may be connected to a GIS for data input and visualization. This approach finds location-oriented insights and patterns by overlapping geographic and business data layers onto geographical maps. It allows us to visualize, analyze, and get a different perspective and a 360-degree view of existing data to solve complex location-based problems.

Time Series

Time series is defined as an ordered sequence of values of a variable at equally spaced time intervals. The time-series analyses in categorized into two approaches:

- Frequency-domain methods - include spectral analysis and recent wavelet analysis.

- Time-domain methods - include auto-correlation and cross-correlation analysis.

The usage of time series models is twofold:

- Acquire an understanding of the impactful events and structure that produced the observed data

- Adjust a model and start forecasting, monitoring or feedback, and feedforward control.

Time Series Analysis is different areas such as:

- Economic Forecasting

- Sales Forecasting

- Budgetary Analysis

- Stock Market Analysis

- Process and Quality Control

- Inventory Control

- Utility Studies

- Survey Analysis

Survival Analysis

Survival analysis is an area of statistics that analyzes the expected time frame taken by one or more events to happen, such as the death of biological organisms and failure in mechanical or electrical equipment. This area is termed reliability theory or reliability analysis in various fields, such as engineering, duration modeling in economics, analysis of causes and consequences of events in history, and human behavior analysis in sociology.Experts use Survival analysis to answer questions like:

- What is the percentage of a population which will survive past a certain time or certain catastrophic event?

- At what rate will entities likely die or fail in case of any catastrophic event?

- Can multiple causes of death or failure be taken into consideration?

- How do certain factors or parameters increase or decrease the probability of survival?

Actuarial science experts and statisticians use survival models, and marketers are designing customer engagement models.Survival models are also used to predict time-to-event, such as time taken by a virus to start spreading to turning into a pandemic or modeling and predicting decay.

Market Segmentation

Market segmentation, also known as customer profiling, is a marketing strategy that revolves around dividing a huge target market into subsets market segments based on demographics, consumers, businesses, or psychology that have, or perceived to have common demands, requirements, hobbies, and priorities, and then designing and stationing strategies to meet them.

Market segmentation strategies are generally used to outline and define the target market and provide insights from data to develop smart marketing plans. The four types of market segments are represented in a visual below:

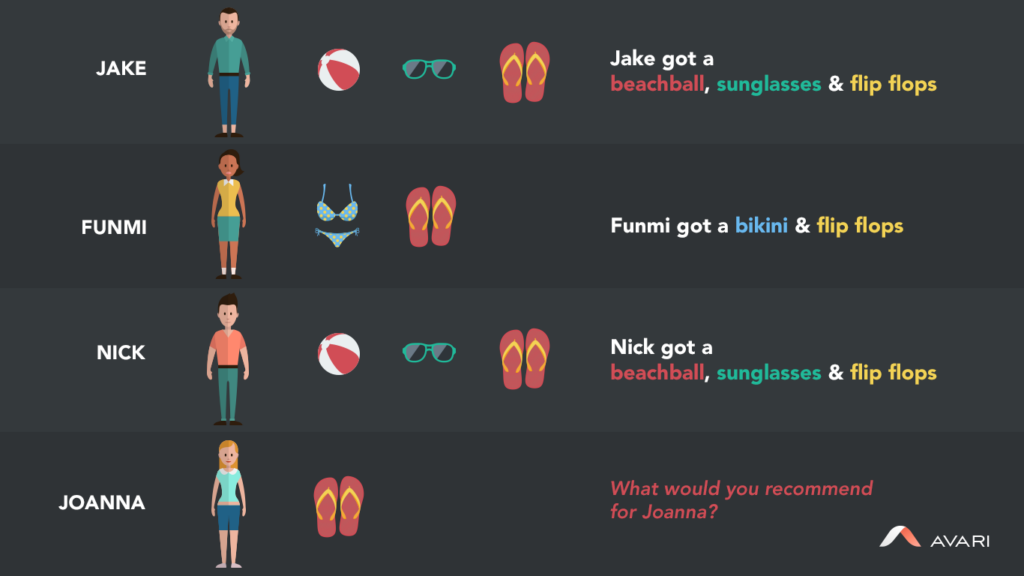

Recommendation Engines

Recommendation engines are defined as a subclass of information filtering methodology that tends to predict the ‘rating’ or ‘preference’ that a user is more likely to give to a certain product or service based on data analysis.

Recommendation engines or systems are becoming a new norm for users to get exposure to the whole digital world using their experiences, behaviors, priorities, and interests. For instance, consider the case of Netflix. Instead of browsing through thousands of categories and movie titles, Netflix allows you to navigate a much narrower selection of items that you are most likely to enjoy. This feature allows you to save both time and effort by delivering a better user experience. With this feature, Netflix reduced cancellation rates, allowing the company to save around a billion dollars a year.

Association Rule Learning

Association rule learning is a method for uncovering useful relations among variables in huge databases. For instance, the rule {Milk, Bread, Eggs} ==> {Yoghurt} found in the sales data of a superstore would show that if customers buy Milk, Bread and Eggs together, they are likely to buy Yoghurt.

In fraud detection, association rules are used to extract patterns linked with fraudulent activities. Association analysis is carried out to outline additional fraud cases. For example, suppose a credit card transaction made by user A was used to make a fraudulent purchase at store B by analyzing all shopping and transaction activities related to store B. In that case, we might identify fraudulent activities linked with another user, C.

Attribution Modeling

An attribution model refers to a principle or set of principles that dictate how credit for sales and conversions is dedicated to touchpoints in conversion paths. By analyzing each attribution model, you can get a better idea of the ROI for each marketing channel.A model comparison tool enables you to analyze how each model distributes the value of a conversion. There are six common attribution models:

- First Interaction

- Last Interaction

- Last Non-Direct Click

- Linear

- Time-Decay

- Position-Based

Google Analytics uses last interaction attribution by default. However, you can compare different attribution models in your account.

Scoring

Predictive models can predict defaulting on loan payments, risk of accident, client, or chance of buying a product or services. The scoring model is a special kind of predictive model that typically uses a logarithmic scale. Each additional 50 points in your score reduce the risk of defaulting by 50%. The foundational basis of these models relies on logistic regression and decision trees, etc. Scoring technology is mainly used in transactional data and real-time scenarios like credit card fraud detection and click fraud.

Predictive Modeling

Predictive modeling utilizes statistics to predict outcomes. The event one wants to predict is in the future, but predictive modeling can be applied to any unknown event, regardless of its time frame. Predictive modeling is the foundational base of some applications above and can be used for weather forecasting, predicting stock market trends, predicting sales, etc. Military strategists can also use it to predict the enemy’s next move based on the previous records.

Neural networks, linear regression, decision trees, and naive Bayes are some of the methodologies used for predictive modeling. It is carried out by maintaining a machine learning model and improved using the cross-entropy technique. It doesn’t necessarily need to be a statistical approach but also a data-driven methodology.

Clustering

Clustering is the methodology linked with grouping a set of attributes or data points so that data points in the same segment known as clusters are more similar to each other than those in separate segments or clusters. It is the primary technique used in data mining and a common practice for statistical data analysis, used in various areas, including machine learning, image recognition, speech recognition, pattern recognition, and bioinformatics.

Unlike supervised classification, which is discussed below, clustering does not require training sets, but some hybrid methodologies are known as semi-supervised learning.

Supervised Learning

Supervised learning is the machine learning methodology of developing a function by enabling a model to learn from a labeled training set ( A part of the data set with which the machine is unaware). The training data consist of a set of training samples. In supervised learning, each sample is a pair based on an input object which is usually a vector, and the desired output value is also known as label, class, or category. A supervised learning algorithm feeds over the training data, analyzes patterns and associations, and finally produces an optimized function to map new and unseen data samples known as a test set.

An ideal scenario requires the algorithm to determine the class labels for an unseen data set accurately. Like predictive modeling, the training phase is improved using the cross-entropy technique to produce a highly optimistic function for accurately recognizing the test set.

Extreme Value Theory

These characteristic values are the smallest (minimum value) or largest (maximum value) and are known as extreme values. Extreme value theory or extreme value analysis (EVA) is an area of statistical modeling that deals with the extreme deviations from the median of probability distributions. It is intended to analyze random variables in a sample and the probability of more extreme events than any previously thought or experienced, both minimum and maximum. Consider an example of catastrophic events that occur once every 1000 or 500 years. Mathematically, it states that if a function f(x) is continuous on a closed interval [a, b], then f(x) has both a maximum and minimum value on [a, b].

Conclusion

Statistical modeling and analysis are used extensively in IT, science, social sciences, and businesses. As well as testing hypotheses, statistics can provide a probability for an unknown outcome or event that is difficult or sometimes impossible to measure or even assume.There are still some limitations just like in some social science areas, such as the study of human consciousness and rational, irrational, or random human choices and decisions, are practically impossible to measure, but statistical modeling and analysis can shed light on what would be the most likely or the least likely to happen based on existing data, information, knowledge, cases or wisdom.Despite having some limitations and downsides, statistical modeling is still in use and producing extraordinary results in various fields, but there is still much space for improvement and advancement in this respect.