Explore the Boundless World of Insights and Ideas

Latest blogs

5 Best Shopify ERP Integration Solutions for 2024

As an e-commerce business, you understand the importance of operational efficiency. So you optimize your order fulfillment process by automating a labor-intensive workflow.

Thankfully, your Shopify e-commerce store integrates with ERP software to give fruitful results. This transformative move consolidates all your business processes in one place. You get better financial oversight, streamlined operations, and improved inventory management.

This article helps you choose the best Shopify ERP integration without any research. Our top 5 picks leverage advanced technologies to meet evolving e-commerce needs. Read on to find the answers you’ve been looking for.

Why You Need to Integrate Your Shopify Store with an ERP Solution

Without a Shopify ERP integration, your Shopify store handles inventory, orders, and payments. In contrast, your ERP takes care of the supply chain, accounts, and HR.

Integrating your ERP solution with your Shopify store unlocks higher operational efficiency. The integration synchronizes data from your e-commerce store and all other business operations. You can:

- Perform data entry across all channels

- Access all your data and information in one place

- Manage supply chain and inventory

- Handle accounts, HR, payables, and other operations

- Process orders and payments

Challenges Faced without a Shopify ERP Integration

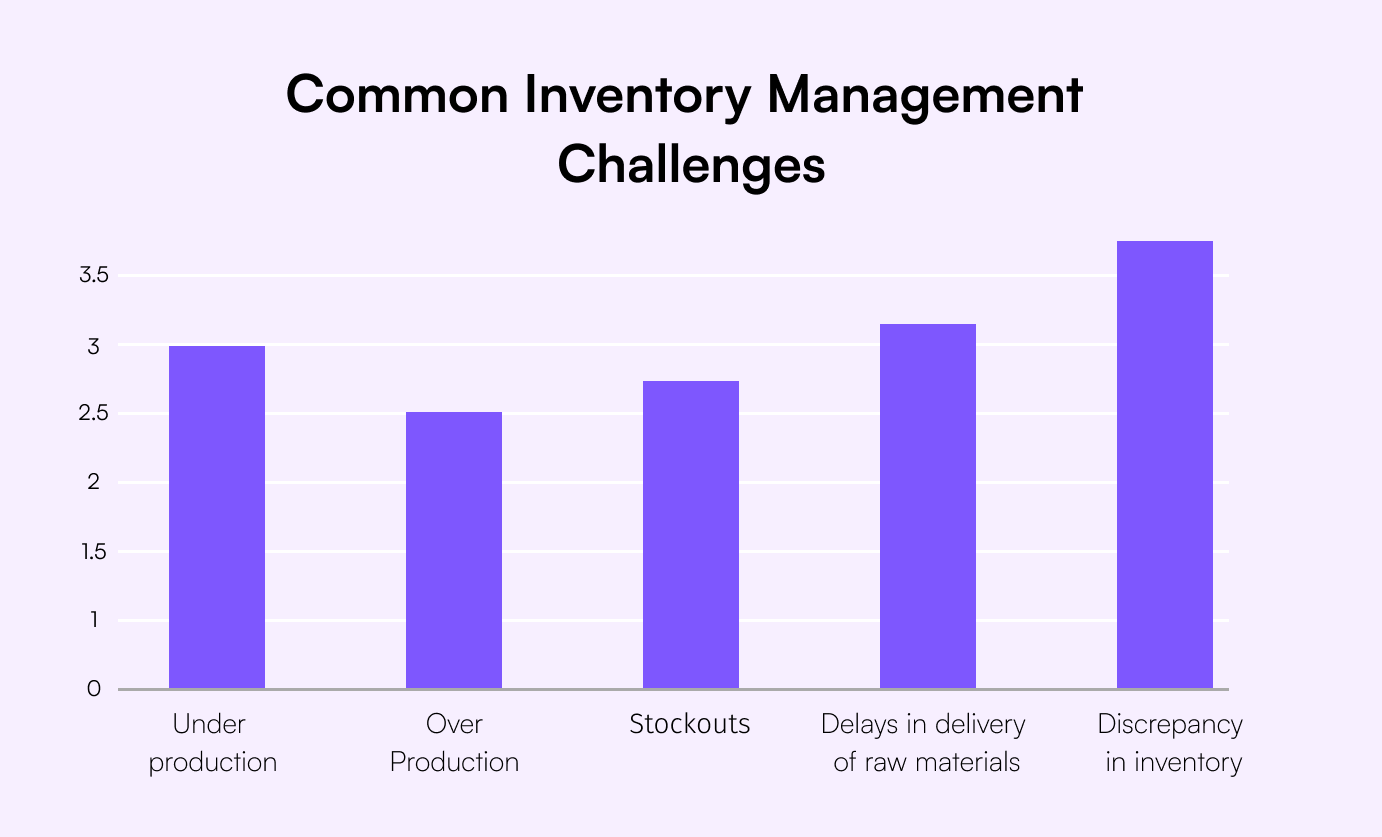

Let’s say you are running your ERP independent of your Shopify store. You have to manually manage your inventory counts and update them in the ERP system. Then, you have to insert the same information in your e-commerce store.

When your business grows and sales go up, manual data entries will increase. With more items in your inventory, keeping a manual count becomes labor-intensive. This leads to inventory discrepancies and manual data entry errors. Moreover, your eCommerce operations become inefficient and difficult to scale.
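To make the discrepancy problem concrete, here is a minimal sketch of the kind of check an integration performs automatically. It assumes both systems can export a simple SKU-to-quantity mapping; the function name `find_discrepancies` and the sample SKUs are hypothetical and not part of any Shopify or ERP API.

```python
def find_discrepancies(erp_counts, store_counts):
    """Return {sku: (erp_qty, store_qty)} for every SKU whose counts differ.

    A SKU that is missing from one system is reported with None on that side.
    """
    mismatches = {}
    for sku in sorted(set(erp_counts) | set(store_counts)):
        erp_qty = erp_counts.get(sku)
        store_qty = store_counts.get(sku)
        if erp_qty != store_qty:
            mismatches[sku] = (erp_qty, store_qty)
    return mismatches


# Hypothetical sample data: one count differs, one SKU exists only in the ERP.
erp = {"SKU-1": 10, "SKU-2": 4, "SKU-3": 7}
store = {"SKU-1": 10, "SKU-2": 6}
print(find_discrepancies(erp, store))
```

With manual entry, someone has to run this comparison by eye for every item. With an integration, the two sets of counts are kept in sync so the list of mismatches stays empty.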

How ERP Integration with Shopify Gives You a Strategic Advantage

An e-commerce business has numerous operations running simultaneously. Imagine having complete oversight and control over each of them. That’s the kind of power you get by integrating an ERP with your Shopify store.

Real-time Data Analysis

You can analyze sales trends and expenditure patterns, and calculate profit margins using your ERP. It can generate financial reports automatically, saving you time and money. Modern ERP solutions offer advanced real-time data analysis features. This means you can take full control over your eCommerce finances. All you need to do is integrate the ERP with your Shopify store.
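As a rough illustration of the profit margin calculation mentioned above, the sketch below computes margin as (revenue - cost) / revenue over a handful of orders. The order records and field names are made up for the example; a real ERP would pull these figures from synchronized sales and purchasing data.

```python
def profit_margin(revenue, cost):
    """Return profit margin as a percentage of revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cost) / revenue * 100


# Hypothetical order data; the field names are illustrative only.
orders = [
    {"revenue": 120.0, "cost": 90.0},
    {"revenue": 80.0, "cost": 60.0},
]
total_revenue = sum(order["revenue"] for order in orders)
total_cost = sum(order["cost"] for order in orders)
margin = profit_margin(total_revenue, total_cost)
print(f"{margin:.1f}%")
```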

Automation

E-commerce businesses have to process and move large amounts of data each minute. According to 67% of organizations, ease of use is one of the most important features of an e-commerce platform. This is made easier with the use of an ERP system. Leveraging automation, you can streamline data exchange between different channels and touchpoints. This eliminates human errors in customer data, shipping details, inventory, and accounting.

Centralized Control of Business Processes

What’s better than having all your business data and communications under one platform? A Shopify ERP integration centralizes your store data and ensures hassle-free communications. This means all departments and channels can use real-time data and enable flexible solutions. Without this integration, every department manages its data separately.

As a result, when teams collaborate, there is the hassle of duplicate data and repetitive requests for information. With an ERP integration, you can create a secure, efficient, and error-free business workflow.

Choosing the Right ERP Integration for Your Shopify Store

Like any other business solution, ERP integrations are available in abundance. However, choosing the right one is the key to enhancing workflow efficiency. So, the following are the factors you need to consider for a successful integration.

Compatibility

First, you need to know whether the ERP system is compatible with your ecosystem. It needs to align with your business processes and goals. For instance, if your business has a high order volume, you’ll need a more efficient system.

It should be able to process large data volumes to fulfill orders quickly. You also need to determine whether the ERP system offers industry-specific features. The more the solution is tailored to your specific industry needs, the better.

Scalability

You’re not running your e-commerce business to stay where you are. Tomorrow, the business will become an enterprise. So, scalability is one of your top priorities. The ERP integration you choose must be able to cope with the changing volumes of data.

It should come with the necessary upgrades to adapt as your business grows. This means having technical support and continuous updates to the system. Not to mention, the process of updating the ERP should be easy for your teams.

Customizability

Not all Shopify integration solutions support the customizations on your e-commerce store. If you’re the kind of brand that loves customization, you must consider the right solution. Some regular integration strategies won’t work for you.

In that case, you need to consider tailored ERP solutions that allow a certain degree of customization. That way, you can get the most out of your Shopify ERP integration.

Support

Like any other tech solution, ERP software is often too complex to understand. Not everyone operating the system will be an expert. So you need to consider a service provider that offers reliable support. This includes technical support, training, and customer support.

You must be able to get assistance in setting up and using the integration effectively. But that’s not all. When it comes to streamlining your business processes, customer support plays a huge part. Consider this your top priority if you want a smooth integration process.

Cost

Initially, you might feel that investing in a new ERP integration solution is adding to the cost. However, in the long run, you will see that it's a fruitful investment. You get full control over your inventory, which allows you to order supplies efficiently. You only spend on what is needed.

That being said, you still need to consider the best value for money. Choose an ERP solution that offers the best price for features that fulfill your requirements. At the end of the day, it’s your business that matters.

Adaptability

With artificial intelligence reshaping e-commerce integrations, adaptability is key. You need a solution that works both now and in the future. With AI and machine learning, customer engagement and data analytics will be better than ever. Invest in an ERP solution that is designed to cope with these future trends.

5 Best Shopify ERP Integration Solutions for 2024

XStak E-commerce ERP

XStak is an all-in-one, self-service ERP integration solution for e-commerce businesses. It transforms your business with omnichannel capabilities through multiple productivity modules. You get more than 50 integrations with the best ERP and e-commerce solutions. That means you can seamlessly integrate and customize your Shopify store.

What’s more? XStak’s cloud-native and modular architecture makes it a scalable and hassle-free solution. A powerful cloud-based POS, coupled with an omnichannel order management system, keeps you ahead of the competition. You also get marketing, payments, and business intelligence tools with a transaction-based pricing plan.

Inventory management is a breeze with real-time, multi-location synchronization of online and offline channels. The integrated warehouse and logistics management system automates order packing and delivery. Whether you consider scalability, customization, or support, XStak checks all the boxes. And it also prepares your business for the future with an AI-driven e-commerce assistant.

Dynamics 365 Business Central

Dynamics 365 is a cloud-based solution by Microsoft known for its CRM capabilities. It offers various tools for marketing, sales, and project management. With financial tracking and project service automation, you can streamline your business processes.

The ERP integration solution goes beyond basic CRM capabilities to offer data-driven insights. As a result, you can improve efficiency with the help of predictive analytics. If service automation isn’t enough to convince you, there’s more. Microsoft Dynamics 365 is a future-proof solution that comes with AI, machine learning, and mixed reality tools.

Brightpearl

Brightpearl is another cloud-based ERP solution that comes with a comprehensive set of tools. Designed to serve small and medium e-commerce businesses, the solution handles multiple operations. These include inventory management, sales, purchasing, customer relationship management (CRM), and accounting.

What makes Brightpearl stand out from the competition is its real-time inventory management system. The functionality updates your inventory counts across all channels. This means whenever a purchase is made, the inventory is updated in your e-commerce store, physical store, and marketplaces.

Acumatica ERP

When it comes to managing your e-commerce operations efficiently, Acumatica ERP is a great choice. It is an ERP software that provides various business management applications. The cloud-based solution offers financial management with project accounting and distribution management.

Acumatica ERP also offers customer data management as well as manufacturing management. All the data is recorded in real-time. This provides you with real-time visibility into your business processes, which in turn helps make data-driven decisions.

NetSuite ERP

NetSuite ERP is another popular choice among e-commerce businesses. Most commonly integrated with Shopify, the ERP solution offers all the great business management functionalities. On top of the CRM, HRM, and inventory management features, you get high customizability.

You can even download data for a given date range. The user-friendly dashboard makes business management a breeze. Whether it is accounting or order management, all your data is accessible anywhere with cloud storage. However, the license cost of this solution increases as you add users.

How to Implement an ERP Integration with Shopify

- Identify your business requirements: Regardless of the ERP integration you choose, you need to lay out your specific business requirements first. This is done by analyzing your business data and the complexity of your operations. You also need to look at pain points and set a goal to be achieved.

- Clean your business data: Before implementing a new ERP integration, it is important to clean, normalize, and validate your data. You also need to eliminate duplicate entries and review who can access each data set.

- Select middleware: Next, you need to select a middleware solution to integrate your Shopify store with the ERP system. Middleware solutions automate the integration process. These include Zapier, Jitterbit, and Boomi (formerly Dell Boomi).

- Configure integration: The ERP configuration process requires you to map data fields between the two systems. In this step, you need to define workflows and synchronize your business data.

- Testing and deployment: Upon testing the integration within your e-commerce system, you can proceed with deployment. For this, you need to perform data synchronization, test workflows, and train your teams.
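The data cleaning and field mapping steps above can be sketched in a few lines. This is a minimal illustration, assuming store records arrive as plain dictionaries. The field names on both sides (`sku`, `title`, `qty`, `item_code`, `description`, `quantity_on_hand`) are hypothetical and do not reflect the actual schema of Shopify or any particular ERP.

```python
def clean_records(records):
    """Normalize SKUs, drop rows without a SKU, and remove duplicates.

    When two rows share a SKU, the first occurrence wins.
    """
    seen = set()
    cleaned = []
    for record in records:
        sku = str(record.get("sku") or "").strip().upper()
        if not sku or sku in seen:
            continue
        seen.add(sku)
        cleaned.append({**record, "sku": sku})
    return cleaned


# Hypothetical mapping from store field names to ERP field names.
FIELD_MAP = {"sku": "item_code", "title": "description", "qty": "quantity_on_hand"}


def map_to_erp(record, field_map=FIELD_MAP):
    """Rename the mapped fields and ignore anything the ERP does not expect."""
    return {
        erp_field: record[store_field]
        for store_field, erp_field in field_map.items()
        if store_field in record
    }


store_records = [
    {"sku": " ab-1 ", "title": "Mug", "qty": 5},
    {"sku": "AB-1", "title": "Mug (duplicate)", "qty": 5},
    {"sku": "", "title": "No SKU", "qty": 1},
    {"sku": "cd-2", "title": "Plate", "qty": 3},
]
erp_rows = [map_to_erp(record) for record in clean_records(store_records)]
print(erp_rows)
```

In practice, a middleware tool handles this mapping through its own configuration screens. The point of the sketch is only that cleaning comes before mapping, so duplicates and invalid rows never reach the ERP.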

Future Trends in ERP and E-commerce Integration

Increasing Automation with AI

As AI and machine learning techniques become more advanced, they will continue to enhance e-commerce processes. We can expect higher levels of automation when it comes to ERP integrations. This will be seen particularly in the areas of automated data entry, order management, and inventory management.

Increased adoption of Cloud-based ERP Solutions

Here’s another future trend that we can already see coming true. E-commerce businesses love cloud-based ERP solutions. Thanks to their flexibility and cost-effectiveness, these solutions will see even higher adoption in the coming years. We see numerous businesses transforming their operations with cloud-based solutions. Not only do these systems offer efficiency but they are also easier to integrate into your Shopify store.

Key Takeaways

In a world of automation and centralized business processes, Shopify ERP integration is a necessity. It lays the foundation for a successful e-commerce enterprise. By bringing together data from different channels, it improves efficiency and team collaboration. Not only that, but you can also add more value to customer journeys.

To avoid the complexity of integrating an ERP solution, it is important to choose the right tech partner. Shopdev provides unmatched technical expertise to help e-commerce businesses reach new heights.

Omnichannel Excellence: How an OMS Transforms Retail with Cegid Integration

Running a retail business in a world of cross-functional teams and agile organizations is tricky. Your customers expect a convenient shopping experience regardless of the platform or medium they use. And all this can only mean one thing - a heated competition for omnichannel OMS excellence in retail.

The key to winning this competition is omnichannel retail.

It's a strategic approach that enables retailers to provide a seamless customer experience across all channels.

Naturally, for retailers, cross-channel consistency is critical. Selling your products through online channels alone is not enough.

Read on to find out how you can take your retail business further with a leading OMS and Cegid integration.

Omnichannel Excellence in Retail Commerce

According to research, 50% of customers search for products through online marketplaces. This means brands that run only brick-and-mortar stores have already lost a lot of leads.

Moreover, 59% of customers like shopping through social channels. And a further 71% like to complete the checkout from within the social channel.

You need to be present everywhere and provide consistent services across multiple channels. This is where omnichannel retail comes into play. Incorporating an omnichannel OMS like XStak order management system allows you to create a seamless customer experience across all devices and touchpoints.

The power of XStak OMS shines through when integrated with Cegid’s cloud-native commerce platform. While XStak OMS synchronizes inventory across all channels, Cegid Retail improves customer engagement. You can offer a personalized, seamless omnichannel experience to customers anywhere in the world.

Consistency Across Channels

No customer enjoys a shopping journey where the brand experience varies from one channel to another. Moving from a social marketplace to a retail website must be a seamless and consistent experience. Otherwise, today’s customers will waste no time before defecting to another brand.

Incorporating XStak OMS with Cegid Retail integration helps you achieve omnichannel OMS excellence. Your customers get a cohesive brand experience regardless of the devices, channels, or touchpoints they use. They are also able to view consistent product catalogs and availability statuses throughout their journey.

Integration of Services

Keeping up with the fast-paced retail environment requires a high level of automation and accuracy. Your eCommerce business cannot rely on separate third-party applications to run its operations.

Fortunately, omnichannel order management systems have service integrations that help streamline and automate operations. Integration of services within an OMS allows retailers to improve performance and reduce operational costs.

For instance, XStak’s omnichannel OMS comes with integrations for payments, logistics, ERP, marketplaces, and more. This means you can provide seamless services across multiple sales channels. Using a single OMS, customers can complete every step from product search to payment processing, checkout, and delivery.

Similarly, Cegid Retail integrates with top-tier tools and solutions to enhance the retail experience. Examples include Expensya integration for expense management and Booxi for appointment scheduling.

Personalization

Every time we hear about a successful retail brand, we see a common offering - tailored experiences. With AI-based data analysis, retailers can utilize customer data like never before. The better the data analysis, the better the personalization.

Personalization helps retailers achieve omnichannel excellence by building trust and loyalty.

And what’s better than having an OMS that personalizes customers’ experiences? The answer is an OMS with Cegid Retail integration.

XStak’s OMS analyzes customer data to create personalized shopping experiences. Customers can view real-time inventory and track their orders from any location.

Simultaneously, Cegid Retail improves engagement with a consistent experience across various channels. The integration helps improve your team’s efficiency so customers can enjoy a smooth experience.

Real-Time Accessibility

Whether you consider order creation, inventory, or order fulfillment, you need real-time updates. Moreover, this real-time data should remain consistent across all your channels. Real-time inventory visibility helps avoid inconveniences related to product availability in different locations.

Similarly, real-time synchronization of customer data helps achieve omnichannel excellence. Your retail brand can deliver the same experience even when the customer chooses to shop using another channel.

Here, the integration of Cegid Retail with XStak OMS is particularly beneficial. It allows Cegid Retail to access real-time information. As a result, order processing and fulfillment are made as efficient as possible. With accurate metrics to work with, you can make well-informed business decisions.

Cegid Retail is a stable, secure, and accessible unified commerce platform that enables you to run your business operations worldwide. Whether it is day or night, your customers get the same brand experience.

Customer-Centric Approach

To make it big in retail, your operations must begin and end with the customer. The journey must be optimized starting from the time the customer engages with your store for the first time.

An OMS helps achieve this by consolidating data from various touchpoints and providing a centralized view within one dashboard. By enabling customers to browse products online and complete their purchases in-store, you can improve engagement tenfold.

Order management systems like XStak centralize customer data for all of your online and offline stores. Based on their shopping behavior and preferences, you can run targeted promotions and loyalty programs. Moreover, a centralized inventory allows you to provide realistic fulfillment times to customers.

These features of the OMS are further improved with Cegid Retail integration. It provides you with a single view of inventory for all your store locations and enables your brand to achieve omnichannel OMS excellence.

The Power of Integration: Cegid and Omnichannel OMS

The story of omnichannel excellence doesn’t end by just incorporating the right OMS into your system. You also need to know how to run your business based on the omnichannel model. This is where Cegid comes in. Cegid provides cloud-based management solutions to implement your retail projects.

In simple terms,

- XStak OMS provides you with the tools to enable omnichannel retail.

- Cegid provides you with the expertise to manage your omnichannel model.

This integration allows efficient order management, fulfillment, customer data management, personalization, and much more.

Cegid’s cloud-native POS

The primary aim of Cegid’s cloud-native POS is to provide a unified commerce platform to retailers. Bringing together and centralizing the data from all sales channels, Cegid helps boost profitability, increase operational efficiency, and improve customer engagement.

With Cegid’s integration, you can accelerate your omnichannel transformation and optimize your supply chain. It’s even better if you have physical stores. Cegid helps improve the in-store customer experience with the help of employee training for better sales.

Omnichannel OMS

XStak’s distributed order and inventory management system is your key to synchronizing your offline and online channels. The omnichannel solution ticks all the right boxes by providing real-time insights.

Automating inventory tracking across multiple stores is just the beginning. You get end-to-end order management, meaning you have clear visibility of the whole process right from the time an order is placed until it is delivered.

When it comes to omnichannel excellence, XStak’s OMS streamlines and automates your operations to save time and reduce errors.

Enhancing Customer Experience

Today’s customers look for more than just their desired products when they visit your brand. They expect tailored experiences and minimum effort to process their order. A successful retail brand is one that understands these changing customer needs and delivers accordingly.

Streamline Customer Journeys with Cegid and Omnichannel OMS

Here is how an omnichannel OMS with Cegid integration improves customer experience:

- Use the OMS to analyze customer preferences and behavior with data from all channels

- Personalize the shopping journey by tailoring the interactions and recommendations at every step

- Run targeted marketing campaigns with personalized emails and advertisements

- Leverage Cegid to extend personalization beyond online channels

- Bridge the gap between online and offline retail outlets by training staff through Cegid Retail

- Integrate customer services to ensure that support agents have access to real-time customer data

- Enable order customization and personalized fulfillment as per customers’ demands

Retail Challenges with Omnichannel Solutions

- DSW, Inc. (Designer Shoe Warehouse) is an American footwear retailer that implements the omnichannel model. It is considered one of the best omnichannel retailers, as it provides highly relevant search results. Customers can access tailored information and use its order-online, pick-up-in-store option.

- Similarly, Abercrombie & Fitch has implemented an omnichannel model to overcome purchase barriers. Apart from online and in-store pickup, they keep cart contents consistent across all customer devices. This makes it easy for customers to browse on one device and check out from another later on.

- The renowned fashion brand Zara has an omnichannel model where customers can shop online, via mobile app, and in-store, while the shopping experience remains consistent. The brand has also incorporated RFID tagging to improve both supply chain efficiency and customer experience.

Operational Excellence and Efficiency

As a retail business, you are used to selling through different channels. So, the key to achieving operational excellence is having one platform to manage all sales channels. Adopting an omnichannel OMS allows you to consolidate orders from multiple channels. As a result, you can manage and fulfill orders more efficiently.
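Consolidating orders from several channels boils down to merging them into a single queue. The sketch below is a simplified illustration, not how XStak or any specific OMS implements it. The `Order` class, channel names, and order IDs are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Order:
    order_id: str
    channel: str
    placed_at: datetime


def consolidate(*channel_orders):
    """Merge per-channel order lists into one queue, oldest order first."""
    merged = [order for orders in channel_orders for order in orders]
    return sorted(merged, key=lambda order: order.placed_at)


# Hypothetical orders from three channels on the same morning.
web = [Order("W-1", "web", datetime(2024, 1, 5, 9, 30))]
store = [Order("S-1", "store", datetime(2024, 1, 5, 9, 0))]
social = [Order("I-1", "social", datetime(2024, 1, 5, 10, 15))]

queue = consolidate(web, store, social)
print([order.order_id for order in queue])
```

Once every order sits in one queue, the fulfillment team works from a single list instead of checking each channel separately.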

Similarly, adopting an omnichannel communication system allows you to seamlessly integrate emails, social media apps, and SMS communications. You get a unified system to handle all your channels, regardless of their type or location.

Optimizing Operations with Cegid and Omnichannel OMS

L’azurde, a prominent jewelry brand in the Middle East, has achieved omnichannel excellence with the perfect solutions. The brand’s decision to incorporate XStak OMS with Cegid integration has significantly improved customer journeys. Integrating Cegid’s POS system with the OMS allowed L’azurde’s in-store staff to access online orders and process payments for efficient fulfillment.

This perfect marriage of XStak OMS and Cegid POS has also provided convenience to L’azurde’s customers in the form of:

- Multi-site fulfillment

- Buy Online, Pick up In-Store (BOPIS)

- Reserve and Collect

- In-store Sales via tablets

The jewelry store’s operations now face no hindrances or silos. They create memorable customer experiences through consistent journeys across all channels.

Empowering Teams for Better Performance

Achieving omnichannel OMS excellence is impossible without a well-trained team. Therefore, you need to align your team with your omnichannel strategy. Ensure that they understand the intricacies involved in delivering a cohesive customer experience.

Cegid provides the right tools and training to your employees for this purpose. With team collaboration and training tools, it helps coordinate in-store operations. Your staff gets a sense of shared responsibility to deliver an omnichannel customer experience.

Competitive Edge in Retail with Omnichannel Strategies

Overall, we can see the retail industry adopting the omnichannel strategy to stay ahead of the competition. Based on customer expectations and market trends, personalized shopping experiences are the key to success. They help build brand loyalty and improve customer lifetime value.

Moreover, consolidated data from omnichannel platforms provides valuable insights to help retailers make informed decisions. The key to winning this competition is to maximize the physical presence by synchronizing the online channels using an OMS.

Meeting Consumer Expectations

In the highly competitive retail industry, it's the survival of the fittest. You must be ready to strategically handle customer demands while providing a seamless experience. Modern retail supply chains depend on automated inventory management systems for improved visibility and tracking.

Omnichannel customers spend 15 to 30% more than single or multi-channel customers.

When you have an omnichannel retail platform, you can run as many online and offline stores as you want. The more the stores, the better the customer experience. Customers will have more touchpoints to interact with and will enjoy the convenience. Meanwhile, your brand can benefit from higher traffic and sales.

Key Takeaways

Omnichannel excellence is emerging as a transformative approach that can help retail businesses stand out. It gives a whole new dimension to seamless customer experiences by providing new ways to interact.

Not only that, but omnichannel management systems seamlessly integrate various channels and touchpoints to ensure maximum customer engagement.

Undoubtedly, this creates delightful shopping experiences that result in lasting customer loyalty.

At shopdev, we help retail brands overcome the challenges associated with customer experiences by providing effective omnichannel strategies. Check out this case study to learn more about our expertise in revolutionizing retail operations and elevating customer experiences.

Custom Software Development Lifecycle: From Idea to Implementation

Every so often, business leaders find themselves at a crossroads: adapt or get left behind. One critical adaptation is investing in custom software, tailored to unique business needs. But diving into the world of software development can be daunting, especially when it feels like uncharted waters. So, how does one transition from a brilliant idea to a functional software solution?

The answer is simple: familiarize yourself with the custom Software Development Life Cycle (SDLC). Today, having a digital edge isn't just an advantage; it's a necessity.

This article discusses the 7 stages of the custom software development lifecycle in detail. You’ll learn the methodology and significance of each phase, as well as standard SDLC models. Our guide is designed for both seasoned business magnates and start-up trailblazers to ensure you make informed decisions. Now, let’s start our journey with the basics!

What is SDLC?

The software development life cycle (SDLC) is a systematic process for developing highly efficient software. The process guides the development team to design and build software that meets end-user requirements. The objective is to minimize risk and the margin of error by dividing the work into distinct phases. Each phase has its own objectives and deliverables that feed into the next phase.

What are the phases of SDLC?

The phases of the Software Development Life Cycle (SDLC) keep changing over time as the industry evolves. The purpose of SDLC phases is to provide a consistent and systematic approach to software development. As a result, they ensure that all functional and user requirements are met as per set standards.

When it comes to custom development, the 7 stages of the software development lifecycle are:

- Idea generation and conceptualization

- Requirement analysis

- System design

- Implementation or coding

- Testing and quality assurance

- Deployment and release

- Maintenance and continuous improvement

The 7 Stages of Custom Software Development Lifecycle

Embarking on a custom software development journey is akin to constructing a building. The process requires careful planning, execution, and maintenance on your part as well as your custom development partner. Below is a roadmap containing 7 stages of the software development lifecycle that you must pay attention to.

Infographic: 7 Stages of Custom Software Development Lifecycle

Idea Generation and Conceptualization

At the heart of every innovative software lies a seed: an idea, a solution to a problem. This is where the journey begins. So, ask yourself what problem your software is solving or what value it's adding. Is it a novel tool for users or an automation of a manual process in the retail industry?

Evaluating the feasibility of your idea in the market is pivotal, so pay attention to detail.

The initial phase of the software development lifecycle is all about brainstorming and gathering insights. So, define the broad objectives of your software project for better conceptualization. It’s wise to gather input from your stakeholders, business analysts, and potential users to shape the vision.

Discuss questions like:

- What problem are we addressing?

- What's the potential market size?

- What are the initial features?

If possible, conduct feasibility studies to assess the technical, economic, and operational viability of your software idea. By the end of this phase, you’ll be able to sketch out a preliminary concept of the software. This initial SDLC phase will help you dive deeper into specific requirements of software development.

Pro Tips

- Market Research: Understand the needs of your target audience and the current solutions available.

- SWOT Analysis: Analyze the strengths, weaknesses, opportunities, and threats of your software idea.

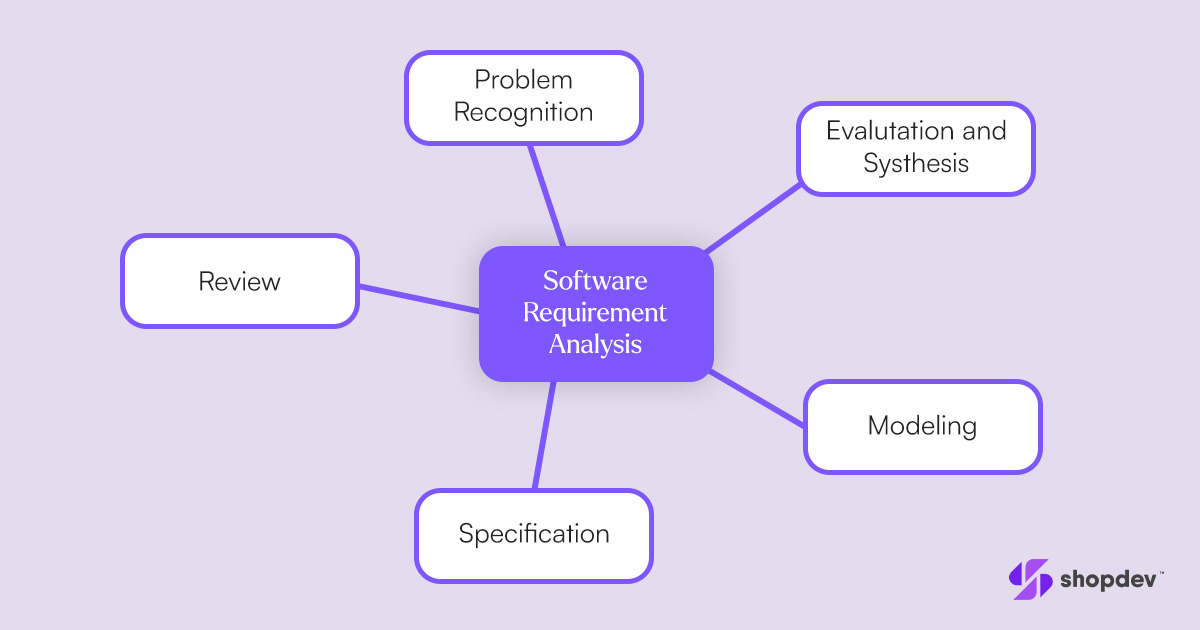

Requirement Analysis

Once your software idea is crystal clear, it's time to delve into the nitty-gritty. The second phase of SDLC involves gathering detailed requirements from stakeholders and understanding user needs. The requirement collection process will help you outline every function, feature, and constraint the software must possess. These could be:

- Functional (what the software should do)

- Non-functional (performance, security, or user experience)

For a better requirement analysis, you can conduct surveys, workshops, and interviews. And don’t forget to create a Requirement Specification Document as it’ll become the reference for subsequent phases. In essence, this stage lays down a clear roadmap of "what" the software should achieve.

Pro Tips

- Engage Stakeholders: Regular discussions with business teams, potential users, and tech teams.

- Document Everything: Use tools like Confluence or Jira to maintain a detailed record.

System Design

Transforming requirements into an actionable design is the heart of the System Design phase. In this stage of the software development lifecycle, architects and designers draft detailed blueprints for the software. These blueprints, often graphical, show how different software components will interact and include data flow diagrams, database designs, and more.

Two major activities involved are:

- High-Level Design (HLD)

- Low-Level Design (LLD)

High-level design involves outlining the main modules including their structure, components, and relationships. Low-level design delves deeper into each module, describing its functions and procedures in detail. Such a comprehensive system design ensures developers have a clear path to follow in the next phase.

Pro Tips

- Prototyping: Create mock-ups or wireframes to visualize the software.

- Feedback Loop: Regularly share designs with stakeholders to ensure alignment.

Implementation or Coding

The Implementation phase of SDLC, commonly known as coding, is where the rubber meets the road. Using the design documents as a guide, developers begin writing code in the chosen programming language. This is one of the most crucial phases in the 7 stages of the software development lifecycle.

One or more teams of developers build each module of your custom software. The major part of the implementation phase is coding, but developers may also perform initial unit testing. This testing helps them ensure that individual components of the software work as intended. In this stage, the main focus remains on coding conventions, clarity, and comprehensiveness to ensure the software is both functional and maintainable.

Pro Tips

- Version Control: Use tools like Git to manage code versions.

- Code Reviews: Regular reviews to maintain code quality and catch errors early.

Testing and Quality Assurance

Before deploying, it's vital to ensure the software is as free of bugs as possible and performs as expected. That’s why QA and testing are considered the heart and soul of custom software development. Quality assurance teams often use both manual and automated testing tools during the QA process. They rigorously test the software against the defined requirements, from functional and performance testing to security audits.

The whole testing phase is all about ironing out the kinks to ensure the software works smoothly. The most crucial testing methods are:

- Unit testing (individual components)

- Integration testing (interconnected components)

- System testing (the software as a whole)
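To make the difference between the first two levels concrete, here is a minimal sketch using Python's standard `unittest` module. The functions `apply_discount` and `order_total` are hypothetical examples invented for illustration, not part of any real project: the unit tests exercise one function in isolation, while the integration test checks that the two functions work together.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return the price reduced by the given percentage (0 to 100)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def order_total(prices: list, percent: float) -> float:
    """Sum the discounted price of every item in an order."""
    return round(sum(apply_discount(p, percent) for p in prices), 2)


class UnitTests(unittest.TestCase):
    """Unit level: one component, tested on its own."""

    def test_apply_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


class IntegrationTests(unittest.TestCase):
    """Integration level: order_total relies on apply_discount."""

    def test_order_total_applies_discount_to_each_item(self):
        self.assertEqual(order_total([100.0, 50.0], 10), 135.0)
```

Saved as a file, these tests can be run with `python -m unittest <filename>`. System testing would go one step further and exercise the deployed application end to end, which is beyond what a short snippet can show.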

Pro Tips

- Automated Testing: Utilize tools like Selenium for repetitive and large-scale tests.

- Bug Tracking: Tools like Bugzilla can help in reporting and managing software defects.

Deployment and Release

Once tested rigorously and deemed ready for users, the software is deployed to a production environment. In this stage, the software is deployed on the intended platform and becomes accessible to the end-users. Depending on your custom software, this might be a full release or a phased one.

Deployment in phases is a good choice if there's a large user base or if risks are perceived. However, software release or deployment is not just about making the software available. Preparing user documentation and training materials, and sometimes conducting training sessions, is also part of this phase of the SDLC.

Pro Tips

- Backup: Always keep backups before deployment to prevent any data loss.

- Monitoring Tools: Use them to observe software performance in real time.

Maintenance and Continuous Improvement

Software, however meticulously designed and developed, will inevitably face issues in the real world. That’s why maintenance is one of the most crucial phases in the 7 stages of the software development lifecycle. So, if you are of the opinion that deployment is the end of the SDLC, think again: the journey isn't over.

Feedback from users, changing business environments, or technological advancements may necessitate software tweaks. Maintenance is about addressing post-deployment bugs, updating the software to accommodate changing requirements, or improving performance. Apart from reactive measures, this phase also focuses on proactive enhancements. This phase ensures that the software remains relevant and functional over time.

Continuous improvement might involve adding new features, refining user interfaces, or optimizing backend performance. Periodic updates and patches ensure the software remains relevant, efficient, and secure.

Pro Tips

- Feedback Channels: Open channels for users to report issues or request features.

- Regular Updates: Schedule them to enhance features, fix bugs, or improve security.

What is SDLC methodology?

SDLC methodology refers to the framework that organizations use to structure different phases of software development. In essence, an SDLC methodology is a set of practices, techniques, procedures, and rules used by those who work in the field. Over the years, several SDLC methodologies have been developed, with each offering a unique approach to software development.

Common SDLC Models

There are several custom software development lifecycle methodologies, and each is a different process in itself. Below, we discuss the common SDLC models used in the industry.

Agile Model

The agile model follows an iterative approach as it divides the software development process into smaller increments or iterations. In this SDLC model, attention is paid to customer feedback throughout the custom software development lifecycle. There’s regular collaboration between the development team and the end user. Since the model follows adaptive planning, the approach allows for changes and adaptations throughout the project.

Advantages of the Agile Model

- Easily accommodates changes even late in the development phase.

- Continuous involvement ensures the product meets the customer's needs.

- Regular iterations allow for early detection and rectification of errors or changes.

Drawbacks of the Agile Model

- Requires active user involvement

- Can be costly

Waterfall Model

Contrary to the Agile model, this is a linear and sequential approach. In the Waterfall model of the custom software development lifecycle, each phase must be completed before the next phase can begin. There's no overlapping or iterating of phases, and the model emphasizes thorough documentation at each stage. All in all, it's a straightforward yet rigid process, as changes are hard to implement once a phase is complete.

Advantages of the Waterfall Model

- Easy to understand and use, especially for smaller projects.

- Each phase completion is a distinct milestone.

- Thorough documentation ensures clarity and can be beneficial for future reference or projects.

Drawbacks of the Waterfall Model

- Inflexibility

- Late Detection of Issues

Iterative Model

The iterative model is considered ideal for those who are constantly updating features and functions. In this custom software development lifecycle, you start with a few basic features and keep improving through repeated cycles. Since each version is an improvement upon the last, it allows you to add features and fix issues.

Advantages of the Iterative Model

- Initial versions can be released to gather feedback for improvements.

- Even basic versions are often functional

- Allows users to engage with the software sooner.

- Allows you to test functions and features on the go.

Drawbacks of the Iterative Model

- Requires careful planning

- Can be time-consuming

Spiral Model

It’s the custom software development lifecycle approach that combines both iterative and waterfall models. The spiral model emphasizes risk assessment at each cycle and is ideal for complex software that requires regular improvement.

Advantages of Spiral Model

- Can be tailored to specific project requirements.

- Continuous risk assessments ensure potential pitfalls are identified early.

Drawbacks of the Spiral Model

- Complexity

- Can Be Expensive

Big Bang Model

Among all common SDLC models, the Big Bang model follows the most unorthodox approach. It requires minimal planning: the team starts from a loosely defined idea and lets it evolve as development progresses. In this model, the developer team starts coding with an explorative approach, allowing the software to take shape as they go.

Advantages of the Big Bang Model

- Offers developers a lot of freedom to innovate and try different approaches, especially when the end goal isn't strictly defined.

Drawbacks of the Big Bang Model

- Unpredictability

- Potential for High Risks

Challenges and Best Practices for SDLC

The SDLC provides a structured framework for software creation, but navigating through its stages is not without hurdles. The custom software development lifecycle is a complex process during which multiple challenges can arise. However, recognizing these challenges and adopting best practices can significantly smooth the path.

Challenges in the Custom Software Development Lifecycle

The most common challenges that one might have to face are as follows.

Requirement Ambiguities

The primary challenge in the software development lifecycle is the clear and accurate gathering of requirements. Misunderstandings or vague requirements can lead to a product that doesn't align with the stakeholders' vision.

Scope Creep

As the development progresses, additional features or changes might be introduced, leading to a constantly expanding project scope. This can delay delivery times and inflate budgets.

Technical Debt

Sacrificing quality for speed in the early stages can result in a pile-up of "quick fixes" or inefficient solutions. This technical debt can become a significant issue in later development stages.

Integration Hiccups & Testing Complexities

Integrating different software components, especially when developed simultaneously or by different teams, can bring forth unforeseen compatibility issues. Additionally, ensuring comprehensive testing that covers all possible use cases can be a daunting task.

Best Practices for a Smooth SDLC

The best way forward is to prioritize in-depth sessions with all stakeholders at the beginning of the project. It’s better to utilize questionnaires, interviews, and workshops to extract as much detail as possible. Furthermore, you should try to:

Document Everything

From the initial concept to the final system design, ensure that every decision, change, and functionality is well-documented. This will provide a clear roadmap for developers and future maintenance or iterations of the software.

Incorporate Iterative Feedback

Regardless of the SDLC model adopted, build in regular feedback loops with stakeholders and potential end-users. The approach will help in the early detection of misalignments, ensuring the final product resonates with user expectations.

Invest in Code Reviews

During the custom software development lifecycle, regular code reviews can drastically improve code quality. They help in identifying inefficiencies, potential bugs, or deviations from best coding practices. You should adopt a multi-faceted testing approach including unit testing, integration testing, performance testing, and user acceptance testing.

Plan for Post-Deployment

Planning for post-deployment includes monitoring the software in its real-world environment. This can help you address any emerging issues and you can gather user feedback for further refinement.

Stay Updated with Technology Trends

The tech landscape evolves rapidly in the digital world, especially when it comes to the SaaS industry. So, stay informed about the latest technologies, tools, and best practices to leverage cutting-edge solutions. This way you can enhance software quality and get an edge over your competitors. The key to success is choosing a highly skilled development partner for custom software building.

Conclusion

The custom software development lifecycle is a structured journey through various stages. By understanding and meticulously following all 7 stages of the software development lifecycle, entrepreneurs can make informed decisions. You need to consider the key factors to ensure that your software not only meets its intended objectives but also stands the test of time.

AI in Digital Marketing: Effective Use Cases

Marketing is a vital part of any company's business. It allows the company to thrive and expand. Without the right marketing strategy and roadmap, it would be almost impossible to attract and engage customers, which can noticeably slow growth and even shrink revenue streams. Therefore, it is crucial to keep up with contemporary marketing trends to stay ahead of rivals and perform better.

Modern times have brought incredible evolution to the marketing industry. The Internet has opened up many new opportunities, and people are now focusing more on digital marketing than on conventional marketing practices. In fact, digital marketing accounts for almost half of the total marketing share. One of the most notable advancements in digital marketing is the integration of artificial intelligence and machine learning into marketing practices to support rational decision-making and focus effort in the right direction.

Use Cases of AI in Digital Marketing

AI for Automated Processes

Human insight is still crucial despite the skyrocketing adoption of AI technology. Even so, AI is becoming a primary aspect of a modern paid media strategy. By automating repetitive tasks, you can save a lot of time and money that can be used elsewhere in core business operations. Spending less time on repetitive tasks can also boost productivity and performance.

AI for Content Creation and Curation

Producing quality content is a primary aspect of establishing authority within your industry or niche. Authority, in turn, improves organic rankings and visibility in the search engine results pages (SERPs). Conventional marketers may be reluctant to let AI take the controls and generate content autonomously, but this technology is closer than one may think. The New York Times, Reuters, and The Washington Post already use AI to generate content. The Press Association, for instance, can now produce 30,000 local news articles a month using AI. You might think that these are formulaic who, what, where, and when stories, and you are right; some of them certainly are. But AI-produced content has now expanded beyond formulaic production into more creative writing domains such as poetry and novels.

The methodology that automatically produces a written narrative from data is called natural language generation (NLG). It is already used for a range of content generation needs, from business intelligence dashboards, business data reports, personalized email, and in-app messaging to client financial portfolio updates. As a result, digital marketers will have more time to work on strategic growth plans, face-to-face meetings, and other core business areas where human judgment is more valuable than AI.

AI to Predict Customer Behavior

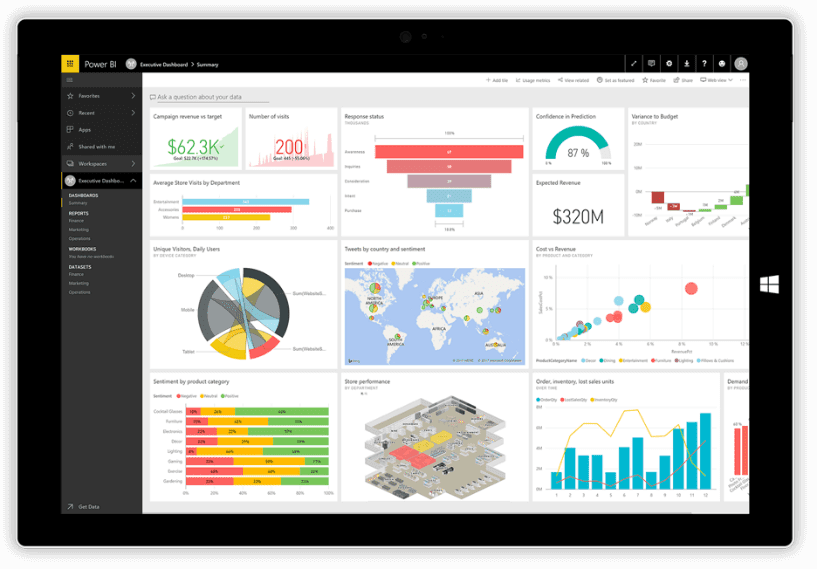

Predictive analytics is another exciting use case of AI for digital marketers. Many predictive analytics tools let users visualize and analyze data in a secure environment. One example is Microsoft Power BI, a self-service platform built for non-technical business users. You no longer need to be a big data expert to adopt the technology.

Microsoft Power BI

AI tools promise enough accuracy that you can predict customers' future behaviour based on past behaviour, data, and statistical models. In this respect, AI not only saves time but also improves precision. When customers' actions can be predicted accurately, you can show them highly personalized ads and notifications that lead them through a buying funnel, improving engagement and boosting sales. In the same way, AI-based digital marketing can help you identify your target market segment. Outlining your Ideal Customer Profile (ICP) is crucial to getting the most out of your marketing budget, because spending time on leads that aren't going to buy is a waste of time and money. Modern AI tools can also analyze your sales history and quickly determine the firmographics and demographics that show where to focus your efforts.

AI for Better Personalization

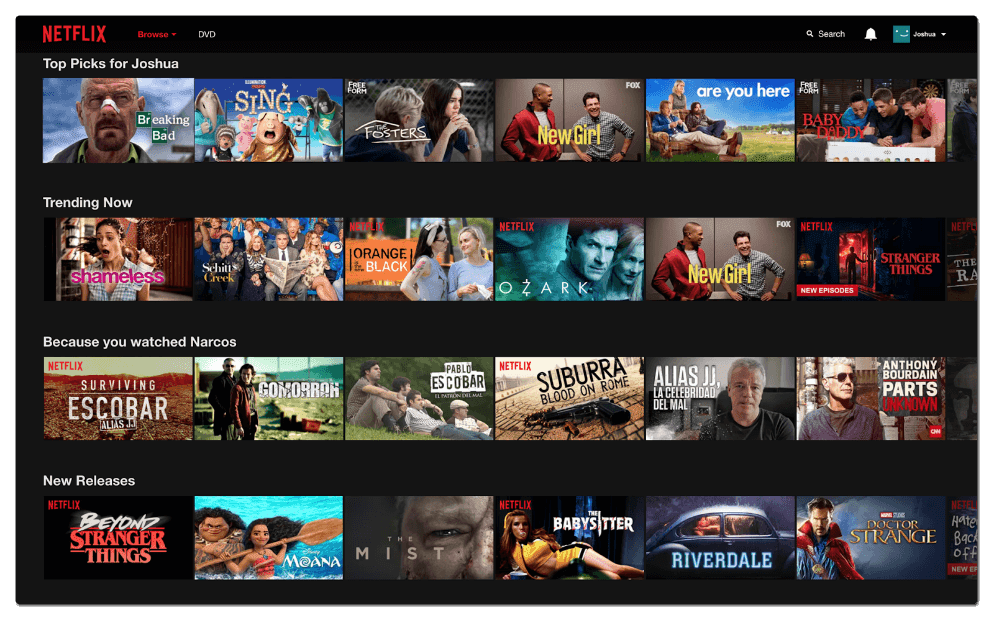

Personalization is no longer just a trendy buzzword; personalized messaging is quickly becoming the norm. Most online consumers expect brands to personalize their messages, whether based on location, demographics, or specific interests. Many consumers won't even pay attention to messages that are not personalized. By analyzing consumer data and behaviour, AI algorithms can actively personalize the customer experience (CX) to ensure consistent engagement and interest. Recommendation engines are one of the most prominent examples: by learning your customers' buying habits and interests, they help you adopt a more fruitful marketing strategy. You can most commonly see this technology on eCommerce platforms and OTT streaming platforms. Consider Netflix as an example. Their goal is to keep you interested in their subscription service. Before and after you finish your favourite season or movie, Netflix pushes recommendations and Top Picks to keep you engaged and discourage you from cancelling.

The average user doesn't know about Netflix using AI to learn their watch history – they'll just be excited to start watching Dark as soon as they finish Stranger Things.

Amazon makes heavy use of AI to provide personalized recommendations based on buying history, items you have viewed or liked, items in your cart, and so on. These recommendations are especially useful once a user is already in the final stage of the purchasing funnel.
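The idea behind a recommendation engine can be sketched with a simple "customers who bought this also bought" counter. This is only an illustration under stated assumptions: the order data and product names are made up, and co-occurrence counting is a deliberately basic technique, not a description of how Netflix or Amazon actually build their systems.

```python
from collections import Counter
from itertools import combinations


def build_co_occurrence(orders):
    """Count how often each pair of items appears in the same order."""
    co = {}
    for order in orders:
        for a, b in combinations(sorted(set(order)), 2):
            co.setdefault(a, Counter())[b] += 1
            co.setdefault(b, Counter())[a] += 1
    return co


def recommend(co, history, k=3):
    """Suggest up to k items most often bought alongside items in history."""
    seen = set(history)
    scores = Counter()
    for item in seen:
        for other, count in co.get(item, {}).items():
            if other not in seen:
                scores[other] += count
    # Highest count first; ties are broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]


# Hypothetical past orders, invented for this example.
orders = [
    ["laptop", "mouse"],
    ["laptop", "mouse", "bag"],
    ["laptop", "bag"],
    ["phone", "case"],
]
co = build_co_occurrence(orders)
print(recommend(co, ["mouse"]))
```

A shopper whose history contains only a mouse would be shown the laptop first, because the two items were bought together most often in the sample data. Real engines add many more signals, such as views, ratings, and recency.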

AI chatbots and Digital Marketing

AI methodologies such as semantic recognition and natural language processing are intended to improve customer experience and deliver better responses. Compared with manual customer service, AI chatbots have many perks. First, AI chatbots allow businesses to go one step beyond the conventional "one-to-one" form of customer service. Chatbots can offer "one-to-many" support by interacting with several customers simultaneously and across different time zones. Second, AI chatbots don't need to rest as humans do, so they can answer customer queries 24/7 and in real time with the same accuracy and speed. Not only does this make them highly efficient, but they can also deliver service outside of office hours. Last but not least, customers can choose the language the AI customer service uses, allowing a brand to deliver a personalized customer experience. Many brands have begun to interact with their customers through messaging applications like WhatsApp, Facebook Messenger, and Slack, all of which can use AI chatbots to automate the process and improve customer experience without requiring human effort.

Chatbots for Customer Service

Chatbots, powered by AI, can communicate with customers in real-time, providing instant responses to inquiries and support requests. These virtual assistants are capable of handling a wide range of tasks, from answering FAQs to guiding users through a purchase process, enhancing the customer experience while reducing the workload on human customer service representatives.

Predictive Analytics

Predictive analytics leverages AI to forecast future customer behaviors based on their past actions. By analyzing data patterns, AI can identify potential future purchases, allowing marketers to tailor their strategies to nudge the customer towards a sale. This proactive approach in marketing ensures that customers are presented with products or services they are likely to need, improving conversion rates.
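As a rough illustration of forecasting from past actions, the sketch below counts which product tends to follow which in customers' purchase histories and uses those counts to guess the next purchase. It is a first-order transition-count model, a much simpler technique than the statistical or machine learning models a commercial tool would use, and the purchase histories are invented for the example.

```python
from collections import Counter, defaultdict


def fit_transitions(histories):
    """Count how often each item is followed by each other item."""
    transitions = defaultdict(Counter)
    for history in histories:
        for current, following in zip(history, history[1:]):
            transitions[current][following] += 1
    return transitions


def predict_next(transitions, last_item):
    """Return (most likely next item, estimated probability).

    Returns (None, 0.0) when the last item was never seen before.
    """
    counts = transitions.get(last_item)
    if not counts:
        return None, 0.0
    item, count = max(counts.items(), key=lambda kv: (kv[1], kv[0]))
    return item, count / sum(counts.values())


# Hypothetical purchase histories, one list per customer, oldest first.
histories = [
    ["phone", "case", "charger"],
    ["phone", "case"],
    ["phone", "charger"],
    ["laptop", "mouse"],
]
model = fit_transitions(histories)
item, probability = predict_next(model, "phone")
print(item, round(probability, 2))
```

In this sample data, two of the three customers who bought a phone bought a case next, so a marketer could prioritize case offers for recent phone buyers.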

Content Creation

AI is now able to generate basic content, such as news articles, social media posts, and even some types of image content. While it may not replace human creativity, AI can significantly speed up content production, providing marketers with a tool to create more content in less time. This is particularly useful for maintaining a consistent online presence and engaging with audiences regularly.

SEO and Content Strategy

AI tools can analyze search trends and user queries to inform SEO and content strategies. By understanding what users are searching for, marketers can tailor their content to meet these needs, improving their visibility in search engine results. AI can also suggest topics that are likely to attract attention, helping content creators to stay ahead of trends.

Social Media Insights

AI's ability to process and analyze large datasets extends to social media platforms, where it can monitor mentions, sentiments, and trends related to a brand. This provides marketers with valuable insights into public perception, allowing them to adjust their strategies in real-time to capitalize on positive trends or mitigate negative feedback.

Email Marketing

In email marketing, AI is used to personalize email content for individual recipients, test different subject lines, and determine the optimal send times to increase open and click-through rates. By continuously learning from user interactions, AI can enhance the effectiveness of email campaigns, ensuring that messages are more relevant and engaging to each recipient.
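Subject line testing ultimately rests on comparing open rates and checking whether the difference is larger than chance would explain. The sketch below uses a standard two-proportion z-test for that comparison; the campaign numbers are hypothetical, and a real email platform may use a different method (for example, a bandit algorithm that shifts traffic as results come in).

```python
import math


def compare_subject_lines(opens_a, sent_a, opens_b, sent_b, alpha=0.05):
    """Compare the open rates of two subject lines with a two-proportion z-test.

    Returns a dict with both rates, the two-sided p-value, and the winner
    ("A", "B", or None when the difference is not significant at alpha).
    """
    if sent_a <= 0 or sent_b <= 0:
        raise ValueError("each variant must have at least one sent email")
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        # Both variants have identical all-or-nothing rates; nothing to compare.
        return {"rate_a": rate_a, "rate_b": rate_b, "p_value": 1.0, "winner": None}
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    winner = None
    if p_value < alpha:
        winner = "A" if rate_a > rate_b else "B"
    return {"rate_a": rate_a, "rate_b": rate_b, "p_value": p_value, "winner": winner}


# Hypothetical campaign: 1,000 emails sent per subject line.
result = compare_subject_lines(opens_a=200, sent_a=1000, opens_b=260, sent_b=1000)
print(result["winner"], round(result["p_value"], 4))
```

Returning no winner when the difference is not significant is a deliberate choice: it avoids switching subject lines on the basis of noise from a small sample.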

Voice Search Optimization

As voice search becomes more popular, AI is helping marketers optimize their content for voice queries. This involves understanding the natural language patterns used in voice searches and ensuring that content provides clear, concise answers to these queries. Optimizing for voice search can improve a brand's visibility in voice search results, a growing segment of online searches.

FAQs on AI in Digital Marketing

Q1: What is AI in digital marketing?

AI in digital marketing refers to the use of artificial intelligence technologies to improve marketing strategies and efforts. This includes personalizing content, optimizing ad campaigns, automating customer service with chatbots, and much more, all aimed at enhancing efficiency and engagement.

Q2: How does AI personalize marketing content?

AI personalizes marketing content by analyzing user data like browsing history, purchase behavior, and preferences. It uses this information to predict what content or products a user might find interesting, creating personalized experiences for each individual.

Q3: Can AI write blog posts or create content on its own?

Yes, AI can generate basic content like blog posts, social media updates, and simple images. However, it's best used as a tool to assist content creators by providing suggestions or drafting content, rather than replacing human creativity entirely.

Q4: How do chatbots improve customer service?

Chatbots handle inquiries and support tasks 24/7 without human intervention, providing instant responses to customers. They can answer FAQs, guide users through processes, and even handle basic transactions, improving the overall customer experience.

Q5: Is AI in digital marketing expensive to implement?

The cost varies depending on the scale and tools used. Some AI tools and platforms offer cost-effective solutions for small to medium-sized businesses, while more advanced implementations might require a significant investment. However, the return on investment can be substantial due to increased efficiency and engagement.

Q6: How does AI help with SEO and content strategy?

AI tools analyze search trends and user behavior to suggest content topics and optimize for search engines. They can identify what users are searching for and help create content that matches these queries, improving visibility and engagement.

Q7: What is predictive analytics in marketing?

Predictive analytics uses AI to analyze data and predict future customer behaviors, such as potential purchases. Marketers use these insights to tailor their strategies, targeting users with content and offers that match their predicted needs.

Q8: Can AI manage social media accounts?

While AI can't fully manage social media accounts, it can help by scheduling posts, analyzing engagement data, and providing insights into trends and sentiment. This aids in creating more effective social media strategies.

Q9: How does AI optimize email marketing campaigns?

AI optimizes email marketing by personalizing emails for each recipient, testing different subject lines, and determining the best times to send emails. This increases the chances of emails being opened and acted upon.

Conclusion

AI is revolutionizing digital marketing by providing tools and technologies that enhance the efficiency and effectiveness of marketing strategies. From personalization to predictive analytics, AI is enabling marketers to engage with their audiences in more meaningful ways. As AI technology continues to evolve, its impact on digital marketing is expected to grow, offering even more opportunities for innovation and engagement. Marketers should stay informed about AI developments to leverage these technologies fully, ensuring they remain competitive in the ever-changing digital landscape.

14 Magento Problems & Effective Solutions for Smooth Operation

Magento is one of the largest and most successful eCommerce platforms. Since Magento is a feature-loaded platform, it can be challenging to navigate, but you can find solutions to most Magento-related problems if you know where to look (yes, in a guide like this one). Everyone encounters problems when working with an eCommerce platform; some are complex, while others are easier to solve. Just because you work with a powerful and complex platform like Magento doesn't mean you can't solve a problem without an expert.

We have listed the most typical Magento issues along with appropriate solutions to make things easier for you. This blog post covers common issues in Magento 2 and their solutions for Magento store owners, Magento developers, and Magento development companies.

14 Common Magento Issues and Their Smart Solutions

We've listed possible Magento issues (particularly with Magento 2, both technical and non-technical) and their solutions. Feel free to add a problem (with or without its solution) in the comments section below, and we'll add the helpful ones to the list.

1. Slow Loading Speed

2. High Server Load

3. Security Vulnerabilities

4. Poor SEO Performance

5. Difficulty in Updating Magento

6. Compatibility Issues With Extensions

7. Complicated Checkout Process

8. Mobile Responsiveness

9. Product Image Issues

10. Configuration Issues

11. Payment Gateway Problems

12. Shipping Method Limitations

13. Data Migration Challenges

14. Multi-Store Management

1. Slow Loading Speed

Problem: A slow-loading website can significantly affect user experience and SEO rankings.

Solution: Optimize images, leverage browser caching, and use a content delivery network (CDN). Consider implementing Magento's built-in caching features and using advanced caching techniques like Varnish.

2. High Server Load

Problem: Magento can be resource-intensive, leading to high server load and slow response times.

Solution: Upgrade your hosting plan to one that suits your store's needs, preferably a dedicated or cloud-based solution. Also, optimize your database and regularly clean up logs.

3. Security Vulnerabilities

Problem: Magento sites are often targeted by hackers, leading to potential security breaches.

Solution: Regularly update your Magento version and installed extensions. Implement strong passwords, two-factor authentication, and secure connections (SSL certificates).

4. Poor SEO Performance

Problem: Magento's default settings might not be optimized for the best SEO performance.

Solution: Use SEO-friendly URLs, create a sitemap, and optimize product pages with relevant keywords. Magento SEO extensions can also automate many of these tasks.

5. Difficulty in Updating Magento

Problem: Updating Magento, especially major versions, can be complex and risky.

Solution: Always back up your store before attempting an update. Use a staging environment to test the update before applying it to your live store. If possible, consult with or hire a Magento specialist.

6. Compatibility Issues With Extensions

Problem: Not all extensions are compatible with every version of Magento, which can lead to conflicts and issues.

Solution: Only use extensions from reputable developers and ensure they are compatible with your Magento version. Test extensions in a staging environment before deploying them live.

7. Complicated Checkout Process

Problem: A lengthy or complicated checkout process can lead to cart abandonment.

Solution: Simplify the checkout process as much as possible. Consider using Magento's one-step checkout extensions to streamline the process.

8. Mobile Responsiveness

Problem: A Magento site that is not mobile-friendly can lead to a poor user experience and lower search engine rankings.

Solution: Use a responsive theme and test your site on various devices and screen sizes. Magento 2 offers improved mobile responsiveness.

9. Product Image Issues

Problem: High-quality images are essential for eCommerce, but they can slow down your site if not optimized.

Solution: Compress and optimize images without losing quality. Use Magento's built-in image optimization tools and consider lazy loading for images.

10. Configuration Issues

Problem: Incorrectly configuring Magento can lead to various operational issues.

Solution: Familiarize yourself with Magento's documentation and consider hiring a Magento expert for initial setup and configuration.

11. Payment Gateway Problems

Problem: Payment gateway integration issues can affect your store's ability to process transactions.

Solution: Ensure that your payment gateway is fully compatible with Magento. Test the payment process thoroughly in a staging environment before going live.

12. Shipping Method Limitations

Problem: Magento's default shipping methods may not meet all your business needs.

Solution: Use third-party shipping extensions to offer more options and flexibility to your customers. Customize shipping methods based on location, weight, and other factors.
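The logic behind location- and weight-based shipping can be sketched as a small rule table. The snippet below is a conceptual illustration in Python only: the zones, weight limits, and prices are invented, and it does not use Magento's API or represent how any particular shipping extension is implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShippingRule:
    zone: str             # hypothetical delivery zone name
    max_weight_kg: float  # heaviest parcel this rule covers
    price_cents: int      # price kept in cents to avoid float rounding


# Illustrative rate table; every value here is an assumption.
RULES = [
    ShippingRule("domestic", 1.0, 500),
    ShippingRule("domestic", 10.0, 1200),
    ShippingRule("international", 1.0, 1500),
    ShippingRule("international", 10.0, 4000),
]


def quote(zone, weight_kg, rules=RULES):
    """Return the price in cents for the tightest matching rule, or None."""
    candidates = [
        rule for rule in rules
        if rule.zone == zone and weight_kg <= rule.max_weight_kg
    ]
    if not candidates:
        return None  # no rule covers this zone and weight
    return min(candidates, key=lambda rule: rule.max_weight_kg).price_cents


print(quote("domestic", 0.5))
print(quote("international", 3))
print(quote("international", 12))
```

Returning `None` for an uncovered parcel lets the storefront hide the method or fall back to a manual quote instead of charging a wrong price.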

13. Data Migration Challenges

Problem: Migrating data from an old eCommerce platform to Magento can be daunting and risky.

Solution: Use Magento's Data Migration Tool and consider hiring a professional to ensure a smooth transition without data loss.

14. Multi-Store Management

Problem: Managing multiple stores from a single Magento installation can be complex.

Solution: Properly utilize Magento's multi-store capabilities. Set up and manage each store's configurations carefully to ensure they operate independently as needed.

Conclusion

Developing and maintaining a web store in Magento can be a serious challenge, particularly for startups. You might run into several obstacles, and this blog post covered some of the common errors and challenges, along with their solutions, that you could face while working on a Magento website. Feel free to get in touch with us if you have any questions about setting up a Magento store or need custom Magento development for your business.

How Enterprises Benefit from a Magento-to-BigCommerce Migration

Managing an eCommerce enterprise store is no walk in the park, and neither is choosing the right platform for that purpose. Magento has long been considered one of the most powerful and customizable platforms for eCommerce enterprises. However, today we find enterprise owners debating the need for a Magento to BigCommerce migration.

Today’s customers expect seamless transitions between online and offline shopping channels. Whether you consider scalability, seamless integrations, or omnichannel capabilities, the industry simply does not accept any compromises.

In this blog, we examine the limitations of Magento and detail the best practices enterprises adopt for migrating from Magento to BigCommerce.

Why You Should Migrate from Magento to BigCommerce

Magento has its Limitations

- Since Magento is a self-hosted platform, your business faces challenges in managing, hosting, and maintaining the servers.

- Magento Open Source does not come with vendor technical support, so applying security patches is your responsibility, and a missed patch can expose your site to security breaches and unexpected downtime.

- The extensions in Magento’s marketplace are not always compatible with your system, so integrations are rarely seamless.

- Magento brings challenges in terms of scalability as your offline and online channels in different regions are not in sync.

- The cost of managing multiple stores on Magento is very high if you consider hosting, security, and development resources.

BigCommerce Saves the Day

- The cloud-based infrastructure of BigCommerce takes away your burden. The fully hosted platform takes care of hosting and server management for you.

- BigCommerce also reduces the administrative burden on your business by providing technical support and updates whenever required.

- The BigCommerce backend seamlessly integrates with your storefront to provide your customers with the best shopping experience.

- With BigCommerce, your physical and online stores in different locations are connected to provide you with real-time insights under one dashboard.

- Since BigCommerce operates on a subscription-based model, the cost of running your enterprise store is much lower than it is using Magento.

When it comes to security, it’s a completely different story over at BigCommerce. The robust security of the platform makes it one of the most reliable infrastructures for your enterprise. Not only that, but BigCommerce also offers world-class performance and uptime, meaning you never have to worry about backing up or restoring your data.

It comes with a number of strong SEO tools that help your enterprise rank higher in search results. These tools allow you to edit page titles, headers, meta descriptions, and URLs.

How Awesome GTI Benefitted from the Migration to BigCommerce

According to Wayne Ainsworth, eCommerce Manager at Awesome GTI, “Our entire migration process from Magento to BigCommerce only took a few months. That was a great turnaround time for us because we were still 100% operational during this entire transition process.

Since making the switch, we’ve had 1.3 million sessions, and 4 million pageviews — and it’s consistently growing. Our online store’s performance is remarkable: bounce rate is reduced, session duration is up, and daily sessions are increasing. The growth we wanted to see is there.”

Planning the Migration Process

The Magento to BigCommerce migration consists of a few steps that are quite specific, but it is a straightforward process. You might even find more than one way to perform the migration, but every method involves backing up, moving, and updating your data.

Conduct a thorough assessment of the existing Magento setup

Before taking the first steps of the migration process, you need to identify the features and functionalities that you need while moving forward. An easy way to do this is by categorizing your existing system’s features into important, secondary, and not required.

Defining migration goals and Key Performance Indicators (KPIs)

Here are some examples of KPIs you can use to plan your migration:

- Improving site performance

- Enhancing user experience

- Adaptability to market changes

- Making your ecosystem more scalable

- Cutting down the cost of web hosting

- Connecting physical and online channels

Identifying potential challenges and developing a mitigation strategy

When it comes to switching between platforms for your eCommerce enterprise, data migration is the riskiest part.

Your data includes your products, passwords, orders, and other saved information. You need to consider each of these data types individually so that you don’t leave anything behind.

- Product data consists of information about individual products, grouped products, and their configurations.

- You have to take care of the passwords for customers’ accounts on your eCommerce site. These are not easy to import, and you will most likely have to ask your customers to create new ones.

- Not just orders, but everything linked to the orders must be transferred to the new platform to avoid inconvenience.

- You also need to consider the personal details of customers saved on your Magento site so that this doesn’t cause any problems moving forward.

A third party would never know your products like you do. This means you will have to put in some extra effort and communicate your objectives clearly. Another important part of planning your migration is taking it slow. The more time you spend on planning, the less you will have to spend dealing with inconsistencies later on. Take your time to review site customizations, extensions, and anything else you will need in your BigCommerce platform.

Creating a detailed migration timeline and budget

Now you need to set a budget for your migration, which will depend on the list of features you have categorized as most important.

In this case, priorities define the order in which you will develop your features after migrating to BigCommerce. You also need to create a project timeline, so make sure to leave room for testing and updating features where required.

The next step before moving on to data migration is creating a backup. You can do this in the Magento admin by selecting System -> Tools -> Backups, provided the built-in backup tool is available and enabled in your Magento version.

To back up your data, you can use one of two methods:

- Go to the File Manager in your web hosting control panel

- Use a File Transfer Protocol (FTP) application.
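
If you have shell access to the server, the file-level backup can also be scripted. The sketch below is only an illustration under that assumption: the function name `archive_directory` and the example paths are hypothetical, and it archives files only. The database needs a separate dump.

```python
import tarfile
import time
from pathlib import Path


def archive_directory(source_dir: str, backup_dir: str) -> Path:
    """Pack source_dir into a timestamped .tar.gz inside backup_dir."""
    source = Path(source_dir)
    if not source.is_dir():
        raise NotADirectoryError(f"{source} is not a directory")

    target_dir = Path(backup_dir)
    target_dir.mkdir(parents=True, exist_ok=True)

    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive_path = target_dir / f"{source.name}-{stamp}.tar.gz"

    # arcname keeps paths inside the archive relative to the store folder
    with tarfile.open(archive_path, "w:gz") as archive:
        archive.add(source, arcname=source.name)
    return archive_path
```

A call such as `archive_directory("/var/www/store", "/var/backups")` (both paths are placeholders) returns the path of the new archive. Keep the backup folder outside the store folder so the archive does not try to include itself.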

Data Migration Strategies

Transferring Product Catalogs, Order History, and Customer Data

Although creating your new BigCommerce account is easy, transferring your data is not. If your data transfer process goes wrong, your site performance can be affected for weeks, or even months. The complex migration process needs to be carried out in steps, starting with product catalogs and then moving on to other information. Alongside the data transfer, you must test the store’s functionality both before and after migration.

It is also advisable to start the migration and complete a light site design before moving forward. Once you have migrated your data, the next steps are setting up the integrations and developing the UI and UX for your eCommerce site.

Yes, the process is quite complex, which is why shopdev’s BigCommerce developers are here to assist you.

Data Mapping and Data Integrity

Ensuring data integrity and accurate data mapping is crucial during the Magento to BigCommerce migration. For instance, you have to map your existing data by entering the Magento Source Store information.

This includes entering the URL of your existing Magento store and installing the connector into your Source Cart. For accurate data migration, you need to provide a few more details such as your API Path, API token, and login credentials. As a result, you can receive the data you need from your Magento site.
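
To make the idea of data mapping concrete, here is a minimal sketch of a field map with a basic integrity check. The column names on both sides (`qty`, `inventory_level`, and so on) are assumptions for illustration, not the schema of any particular migration tool, so adjust them to your actual export and import formats.

```python
# Hypothetical mapping from source export columns to target product fields.
FIELD_MAP = {
    "sku": "sku",
    "name": "name",
    "price": "price",
    "qty": "inventory_level",
    "weight": "weight",
}

REQUIRED = ("sku", "name", "price")


def map_product(source_row: dict) -> dict:
    """Rename fields via FIELD_MAP and fail loudly on missing required data."""
    missing = [f for f in REQUIRED if source_row.get(f) in (None, "")]
    if missing:
        raise ValueError(f"row {source_row.get('sku')!r} is missing {missing}")

    mapped = {}
    for source_field, target_field in FIELD_MAP.items():
        if source_field in source_row:
            mapped[target_field] = source_row[source_field]
    mapped["price"] = float(mapped["price"])  # exports often store numbers as text
    return mapped


def find_unmapped_fields(source_row: dict) -> set:
    """Report source columns that would be silently dropped."""
    return set(source_row) - set(FIELD_MAP)
```

Running `find_unmapped_fields` on a sample row before the real transfer is a cheap way to spot custom attributes that have no destination yet.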

Customization and Design Considerations

When it comes to eCommerce stores, the look and feel are some of the most important factors to consider. After all, the first thing your customers will notice when they visit your web store is the UI. As you might have guessed, there are some design differences between Magento and BigCommerce, and this is where your migration starts getting tricky.

Design and Themes

If you are used to the high level of customization provided by Magento, you’re going to face some limitations with BigCommerce. While Magento is an open-source platform that allows developers to access the source code freely, BigCommerce does not.

The customizability in Magento is limitless, while BigCommerce, a SaaS platform, only allows you to adjust themes using some bits of code. On the plus side, BigCommerce currently offers 15 free themes and 100+ paid options, which are mobile responsive and easily customizable.

Replicating the Look and Feel in BigCommerce

With the design differences in mind, you still do not want to drift away from the original look and feel of your eCommerce store. In order to replicate the design and custom functionality of your Magento store in the BigCommerce platform, you need to choose the BigCommerce theme that most closely matches the template and design you were using previously.

Upon selecting the right theme in BigCommerce, you can then proceed to customize it according to your brand’s design language. The BigCommerce theme editor allows you to make changes to the design of your store, such as adjusting the layout, colors, and fonts to replicate the theme of your Magento site.

Optimizing the user experience during and after migration

While your eCommerce store is migrating from one platform to another, your customers’ expectations remain the same. Therefore, it's important to maintain a great user experience during and after the Magento to BigCommerce migration.

First of all, you need to keep your customers informed of what’s to come. You can send out updates through social media channels or emails to inform them about possible downtime. Even so, aim to keep downtime to a minimum. Make sure you implement redirects for pages that stop working while you migrate your site, and also take steps to maintain product URLs.

You also need to ensure that the mobile responsiveness and performance of your site are not compromised at any point. During the migration, your customer support team must be ready to answer any questions your customers might have. Finally, when you have migrated to BigCommerce, make sure to collect feedback from customers to pinpoint areas that need improvement.

SEO Considerations

SEO is the first thing that comes to mind when you think of growing any kind of online store. For Magento users, migrating to BigCommerce is good news in terms of SEO. As BigCommerce offers some great SEO tools, your enterprise has a better chance of ranking higher on Google. Both Magento and BigCommerce allow editing page titles, image alt text, descriptions, and URLs, but the SEO-friendly features of BigCommerce take you a step further.

The impact of migration on search engine rankings

Unless you carefully plan and execute each step of your migration, the process will take a toll on your SEO rankings. For instance, the URLs for your products and pages will change when you move your site from Magento to BigCommerce. Search engines will not be able to discover and index your product pages like before, and there might also be content duplication issues.

Not only that, but Google also looks for secure websites that have an SSL certificate. This means that if your Magento site has an SSL certificate, your BigCommerce site must have one in order to achieve the same SEO ranking.

301 redirects and best practices for preserving organic traffic

So how can you avoid the impact of the migration on your store’s SEO ranking? This is where 301 redirects come into play. When your product URLs change, you need to implement 301 redirects that map each old Magento URL to the corresponding BigCommerce URL. A 301 signals a permanent move, which helps Google transfer the old page’s authority to the new URL and index it.
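
A redirect map is easier to review when it is built from data rather than typed by hand. The sketch below assumes you can export old and new URLs keyed by something both platforms share, such as SKU. The function name and the sample URLs are hypothetical, and how you load the resulting pairs into your store depends on the tooling you use.

```python
from urllib.parse import urlsplit


def build_redirects(old_urls: dict, new_urls: dict) -> tuple:
    """Pair old and new URLs by a shared key such as SKU.

    Returns (redirects, unmatched): redirects is a list of
    (old_path, new_path) tuples, unmatched lists keys with no new URL.
    """
    redirects, unmatched = [], []
    for key, old_url in sorted(old_urls.items()):
        new_url = new_urls.get(key)
        if new_url is None:
            unmatched.append(key)
            continue
        old_path = urlsplit(old_url).path or "/"
        new_path = urlsplit(new_url).path or "/"
        if old_path != new_path:  # identical paths need no redirect
            redirects.append((old_path, new_path))
    return redirects, unmatched
```

The `unmatched` list matters as much as the redirects: every key in it is an old page that would return a 404 after launch unless you decide where it should point.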

Apart from the 301 redirects, you also need a detailed SEO strategy that includes best practices to preserve your store’s organic traffic. This includes metadata optimization, continuous site performance monitoring, and keyword research. You will also need to create a new XML sitemap for your BigCommerce site to help Google discover your pages.
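
For reference, an XML sitemap is just a list of page URLs in the sitemaps.org format. The sketch below shows the minimal structure. The function name is hypothetical, and your platform may already generate a sitemap for you, in which case this only illustrates what the file contains.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list) -> str:
    """Return a minimal sitemap document with one <url><loc> per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped on output
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Feeding it the new URLs from your redirect map keeps the sitemap and the redirects consistent with each other.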

Testing and Quality Assurance

Once your Magento to BigCommerce migration is complete, you need to implement a thorough testing procedure. The BigCommerce store needs to be tested for bugs, errors, and responsiveness on both web and mobile platforms.

The importance of testing during and after migration

When you consider migrating any eCommerce site, the testing phase is often left out. However, testing is actually the most important part of the entire process. Unless you go through it, you cannot be sure that your new site works as intended.

This means you won’t be able to answer when a customer runs into a 404 error and asks you why the page isn’t working as expected. In order to avoid such an occurrence, you need to test the site’s functionality, such as browsing, carrying out product searches, adding items to the cart, and the checkout process.
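
Part of this check can be automated before customers ever see a broken page. The following is a rough sketch, not a full test suite: it requests each URL and reports those that answer with an error status. The function names are hypothetical, and some servers reject `HEAD` requests, in which case a `GET` would be needed instead.

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url (redirects are followed)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses arrive as exceptions


def find_broken_pages(urls, get_status=fetch_status) -> dict:
    """Map each URL whose status is 400 or above to that status."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status >= 400:
            broken[url] = status
    return broken
```

The `get_status` parameter lets you swap in a stub when trying the logic offline. Connection failures are deliberately not caught here, so an unreachable host stops the run instead of being mistaken for a healthy page. Flows such as search, cart, and checkout still need manual or browser-based testing.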

Conduct user acceptance testing (UAT) to ensure a flawless customer experience